DL Compilation

The cs400 covers the rudimentary elements of Computer Science and aims to enhance the work-related self-efficacy of someone only with a degree in Mathematics. This first post is intended to celebrate the announcement of torch.compile in early March 2023. Pytorch 1.0 was released in 2017 essentially to provide Python API wrapper around C++ kernel.

I

1.1. Computer

Charles Babbage’s Analytical Engine (1837) is widely regarded as the first conceptual design of a general-purpose digital computer. It featured an arithmetic logic unit (ALU), control flow with conditional branching and loops, and dedicated memory modules. Building on this lineage, Alan Turing proposed the Turing Machine (1936), laying the theoretical foundation for analysing computation and forming the basis of modern CS by formalising the limits of what machines can compute. The Electronic Numerical Integrator and Computer (ENIAC) (1945), developed by John Mauchly and J. Presper Eckert, is arguably the first programmable electronic digital computer.

Although computers vary in size, processing power, and also purpose, they could still be categorised as follows: i) personal computers (PCs): are general-purpose systems used for tasks such as office productivity, web browsing, multimedia, and gaming; ii) mainframes: are optimised for high-volume transaction processing, large-scale database management, and mission-critical workloads that demand scalability and reliability; iii) supercomputers: offer extremely high computational throughput and are used for scientific applications such as climate modelling or molecular simulation; Programmers interact with computers by writing instructions that control hardware.

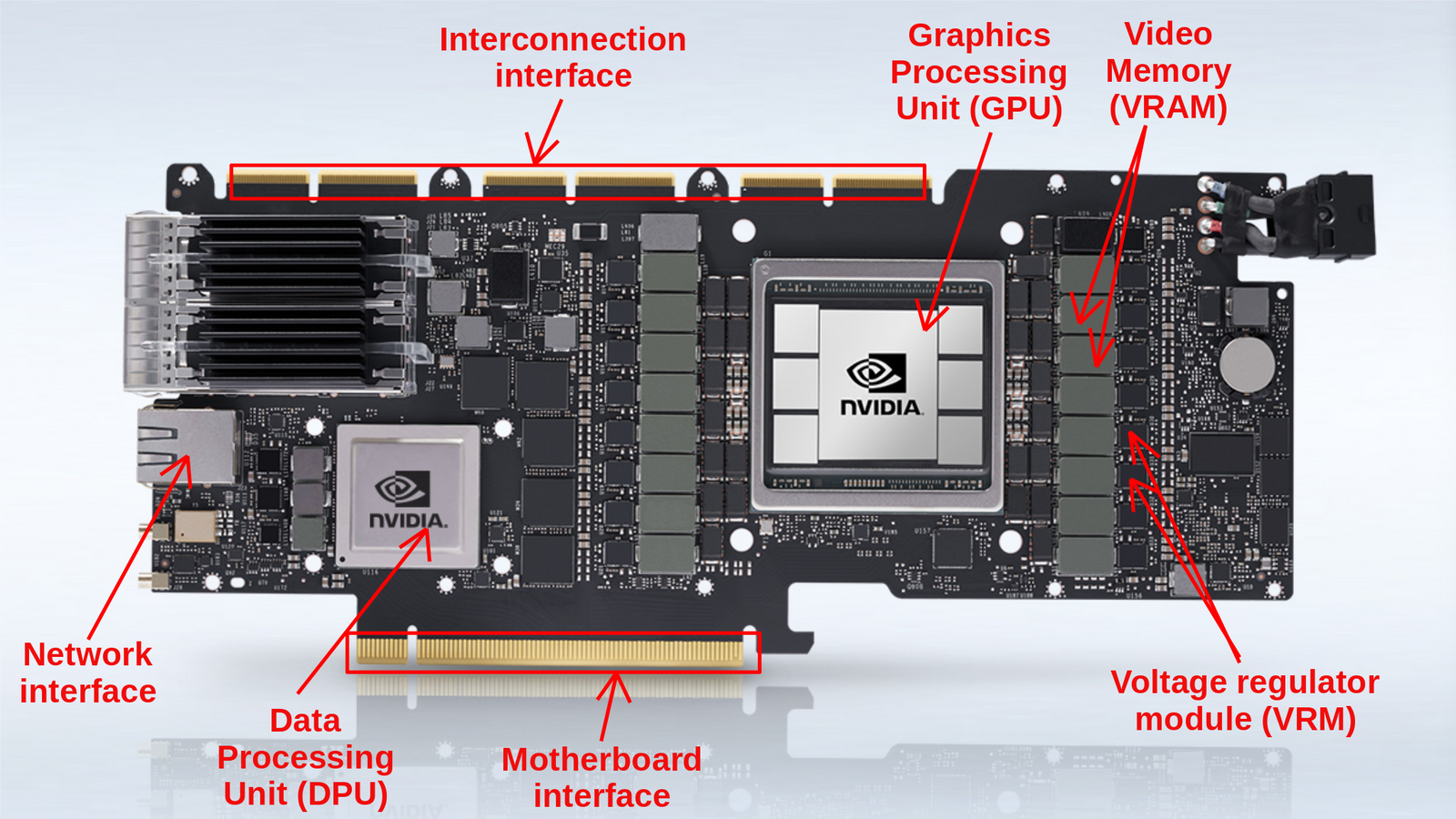

At a high level, the motherboard provides the physical circuitry for implementing the key components of the Von Neumann architecture (1945). It serves as a central hub connecting the CPU socket, RAM slots, storage interfaces, I/O ports, and expansion slots via buses and chipsets. Modern motherboards support add-in cards such as GPUs, sound cards, and additional storage controllers to enhance performance or capabilities. Firmware, such as BIOS or UEFI, embedded in the motherboard performs power-on self-tests (POST) and then invokes a bootloader (e.g. GRUB on Linux or Boot Manager on Windows), which loads the kernel into RAM from storage.

1.2. Central Processing Unit

A central processing unit (CPU or processor) executes instructions, known as programs, via the fetch-decode-execute cycle. Modern CPUs feature multiple cores (e.g. 4 to 64) along with a floating-point unit (FPU) for efficient real-number computation, while each core includes its own arithmetic logic unit (ALU) and control unit (CU). CPUs also possess dedicated memory modules. The fastest form of memory, called the register, temporarily holds data and instructions immediately required by the CPU, while its cache hierarchy, often comprising {‘L1’: smallest-fastest, ‘L2’: larger-slower, ‘L3’: largest-shared across cores}, mitigates latency from slower main memory.

Given clock speed refers to how quickly instructions are issued per second, a CPU’s word size refers the number of bits it can process in a single operation, and modern CPUs commonly employee 32-bit or 64-bit. Word size affects register width, data path size, and the design of the instruction set architecture (ISA), where instructions (represented in binary) abstract the underlying hardware and supply a software interface. Using data types that align with the native word size can improve performance and reduce instruction count, but programming languages (e.g. C) define only minimum type sizes (e.g. int $\geq$ 16 bits) to maintain portability across architectures.

For example, a 4-bit processor can handle only $2^4 = 16$ distinct values at a time, typically ranging from $[0, 15]$ or signed $[-8, 7]$, and merely support a limited instruction set and address space. In contrast, a 32-bit CPU (theoretically-) uses 32-bit addresses, allowing the CPU to access $2^{32}$ B or 4 GB of memory (i.e. some are reserved for OS). Thus, a larger word size allows the CPU to operate on wider data types and address larger memory spaces, but increasing word length also complicates circuit design and may even lead to unoptimised performance. Modern CPUs use techniques such as pipelining and branch prediction to maximise instruction throughput.

1.3. Random Access Memory

A computer memory is essentially an array of bytes each with a unique address. Random access memory (RAM), register, and cache however differ in speed and width. The latency of dynamic RAM (DRAM) can be estimated as $T_{\text{precharge}} + T_{\text{row activation}} + T_{\text{col. access}}$. That is, the physical design of memory cells matters, and the optimal fetch is achieved by retrieving contiguous data in an active row. Static RAM (SRAM), despite its higher costs, is better suited for cache memory due to its lightning speed and stability. A well-designed program (e.g. contiguous memory allocations) can achieve a higher temproal and spatial locality, which then improves cache hit rates.

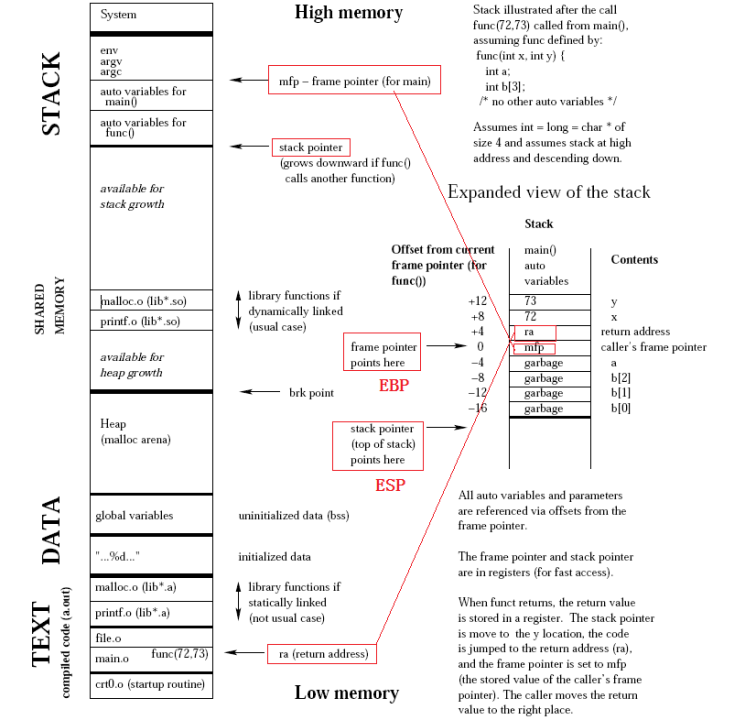

An instance of a (user-) program, known as a process, resides in RAM during execution. The text segment of the process, as depicted in its memory layout, contains the program’s executable code, comprising machine instructions (e.g. 4-bit, 8-bit, 32-bit) that the CPU fetches and executes. To protect the integrity of the program and prevent accidental or malicious alterations, the segment is typically marked as read-only, and moreover, many OS implement hardware-backed security mechanisms, such as execution prevention (e.g. DEP or NX bit), to ensure that this code cannot be executed from unintended regions of memory (e.g. stack, heap, or data segments).

Function calls and local variables are handled on the stack which follows a last-in, first-out (LIFO) structure. Each function call, with an address managed by a stack pointer, pushes a stack frame onto the call stack. Stack operations do not require system calls because stacks are preallocated at compile time, which improves predictability and cache locality. In contrast, heap allocations are used for dynamically sized data such as variable-length arrays or objects (e.g. linked lists). The region is unordered and (de-) allocations occur in arbitrary order. These operations require system calls, while being more prone to fragmentation, cache misses, and memory leaks.

1.4. Persistent Storage

Persistent storage typically refers to hard disk drives (HDDs) or solid-state drives (SSDs). Both are non-volatile, and so can retain data without constant power supply. HDDs are mechanical in which the physical movement required in reading data yields slower access, whereas SSDs use electronic signals for much faster access. In recent years, many computing devices released have featured non-volatile memory express (NVMe) SSDs, which operate over the peripheral component interconnect express (PCIe) interface, enabling direct communication between the SSD and CPU. Flash memory, similar to SSDs, is also commonly used for storing applications.

A disk can also be used as virtual memory, via paging or segmentation, to free up RAM for a process. But, excessive swapping leads to thrashing….

1.5. I/O Device

I/O devices allow beginners to manipulate complex systems without requiring deep knowledge of binary encoding or CPU instructions. In particular, {I: ‘keyboard’, O: ‘monitor’} are equipped for communications between a computer and its external environment. Each key press is converted into a corresponding binary using encoding standards. For instance, the unicode transformation format-8 (UTF-8) is a variable-length standard representing over a million codepoints as sequences of 8-bit bytes. This is a superset of all characters in widespread use today, including those defined by Hex and ASCII (i.e. 7-bit encoding standard supporting $2^7 = 128$ English letters).

Programmers interact with computers through programming. At the lowest level, assembly languages (e.g. x86, ARM, MIPS) are used because it is more human-readable. The syntax corresponds to the instructions defined by an ISA, translating mnemonics to machine instructions: {‘ADD’: { Hex: 0x8, Semantic: A $\gets$ A $+$ B }}. Low-level languages like C (C++?) offer greater abstraction while preserving hardware control, with compilers translating source code into executable files. Finally, high-level languages, such as Python, Java, and Golang abstract away hardware details entirely, relying on interpreters and/or JIT compilers to compile and then execute code.

The choice of programming languages often comes down to execution speed and the types of projects. Compiled languages such as C++ and Rust are preferred for tasks requiring high performance and low latency (e.g. game engines, real-time systems). In contrast, interpreted languages such as Python, JavaScript, and Ruby are used when implementation speed and flexibility are critical (e.g. web development, data analysis). Said differently, the execution model—whether interpreted, compiled, or JIT-compiled—hugely influences the choice of language. More details are discussed below regarding the execution model context of machine learning (ML).

II

2.1. Graphics Processing Unit

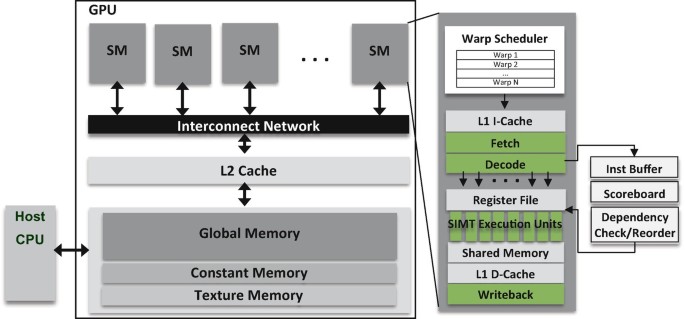

A graphics processing unit (GPU) is an integral part of a graphics card, built for single instruction, multiple threads (SIMT) execution of relatively simple and repetitive tasks. GPUs feature a way more cores operating at lower clock speeds, compared to CPUs, delivering high computational throughput and efficiency in conjunction with the high-bandwidth memory. Floating point operations per second (FLOPS) measures the GPU’s computational capacity, while floating point operations (FLOPs) indicates the computation demands of neural networks (NNs) which, in the case of LLMs, are hugely influenced by the number of parameters and amount of training tokens.

Similar to CPUs, a streaming multiprocessor (SM) serves as a core of the GPU which executes a thread block. Given that a thread is the smallest unit of execution that handles a portion of tensor operation (e.g. element-wise multiplications), an warp is a set of threads organised in a uniform size to be following the SIMT model, and multiple warp schedulers facilitate the switching between warps within the SMs whenever a core stalls. A block is a collection of warps assigned to each SM. More blocks than a number of SMs should be properly dispatched in modern GPUs to maximise utilisation and ensure higher throughput. This is known as latency masking.

2.2. CUDA & Tensor Core

A CUDA core in a SM is a general-purpose processing units, essentially an ALU, designed for parallel computing across thousands of threads. In particular, each CUDA core typically handles one instruction per cycle per thread, supporting both FP and INT operations for basic arithmetic ($+$, $\times$) and control flow ($\land$, $\lor$, $\neg$). The CUDA cores are absolutely well-suited for graphics rendering, simulations, and other general-purpose parallel workloads, but they still lack abilities in handling specialised mathematical operations, including a general matrix mutiplication (GEMMs). Consequently, they must be supplemented by other components of a GPU to overcome these.

A tensor core, introduced in the Tesla V100, is a specialised hardware unit designed for 64 mixed-precision (i.e. FP16$\,\times\,$FP16 → FP32) matrix operations per clock cycle, often known as fused multiply-add (FMA). Given that the back-propagation can be sensitive to low bit-width data formats such as FP16 as gradient values may fall outside the representable range, Micikevicius et al. (2017) i) kept the weights in an FP32 master copy; and (ii) up/down-scaled gradients via a scaling factor; to avoid underflow (i.e. zero clipping). Pytorch 1.6+ and a CUDA-capable GPU supports the package for automatic mixed precision (AMP): ‘@torch.autocast’ and ‘torch.GradScaler’.

2.3. High-Bandwidth Memory

Its memory hierarchies are also optimised for high throughput and low latency. For instance, the V100 has integrated shared memory (SMEM) and L1 cache, allowing L1 cache to enjoy SMEM’s high-bandwidth performance and low-latency. Both SMEM and L1 cache store more frequently accessed data to reduce the need for slower global memory access (i.e. VRAM). On the other hand, L2 cache, an off-chip memory can be shared across all SMs. They facilitates efficient data flow between SMs and the high-bandwidth memory (HBM), and ultimately enhance throughputs to L1/SMEM by enabling quick access to larger datasets in VRAM.

A high performance of HBM is essentially attributed to its vertical stacking architecturem. As shown below, several DRAM dies layered on top of each other and interconnected by through-silicon vias (TSVs). However, an NVIDIA’s architect also agreed that the memory bandwidth cannot fully support the peak TFLOPS of their GPUs. For example, the highest bandwidth of the A100 is theoretically 9,750 GB/s (64 bytes/SM × 108 SMs × 1410 MHz), yet HBM2’s 1,555 GB/s is 6.3 times less than what the SMs request. CUDA developers hence must pay attention to data access pattern because CUDA (i.e. GPU’s C) does not enforce the contiguous memory allocation.

2.4. GPU Architecture

For instance, the A100 GPU has 7 graphics processing clusters (GPCs). Each GPC contains 6 texture processing clusters (TPCs), and each TPC has 2 SMs. Each SM includes 64 CUDA cores, 4 tensor cores, 4 warp schedulers, and 256 KB of local registers along with 192 KB of L1 cache/SMEM. The memory hierarchy features a 40 MB L2 cache and 40 GB of VRAM leveraging a 5120-bit HBM2 interface with 5 stacked modules, delivering a bandwidth of 1.55 TB/s. For interconnects, the A100 supports NVLink Bridge (600 GB/s for 2 GPUs) and PCIe (64 GB/s), and also multi-instance GPU (MIG) technology, allowing up to 7 instances per GPU.

A100_for_illustration = {

graphics_processingc_cluster: [ # i.e. repeat for 7 GPCs

{

name: GPC1,

texture_processing_cluster: [ # i.e. repeat for 6 TPCs/GPC

{

name: TPC1,

"streaming_multiprocessor": [ # i.e. repeat for 2 SMs/TPC

{

name: SM1

"cuda_cores": 64,

"tensor_cores": 4,

"warp_schedulers": 4,

"sm_local_memory": {"registers": "256 KB/SM", "L1_cache/SMEM": "192 KB"}

},

]

},

]

},

],

memory_hierarchy: {

"L2_cache": "40 MB"

"VRAM": {"interface": "5120-bit HBM2", "num_stacked": 5, "memory": "40 GB", "bandwidth": "1.55 TB/s"},

},

interconnect: {NVLink-Bridge for 2 GPUs: 600 GB/s, PCIe: 64 GB/s}

multi_instance_gpu: {support: True, max_num_instances: 7}

}

2.5. Interconnection

An interconnect is a communication link that enables data transfer between components within a computer system. In the context of GPUs and CPUs, interconnects allow different parts of a system—like processors, memory, storage devices, and expansion cards—to communicate efficiently. They can be physical connections like cables or circuit pathways on a motherboard, or even software protocols that manage how data flows between components. For example, tech like NVIDIA’s NVLink is an inter-GPU and GPU-CPU Interconnects, managing data transfer across various types of multiple processing units, and allowing GPUs to efficiently share data.

System interconnects link the broader components of a system, such as processors, memory, storage, and expansion cards. Common interfaces like PCIe supports high-throughput connections essential for tasks that depend on rapid access to storage or network resources, maintaining data flow throughout the entire system… A large model may not fit into VRAM and force it to be distributed across …

III

3.1. Compiler Framework

Low-level virtual machine (LLVM), released in 2003 by Chris Lattner, is a compiler and toolchain set designed to minimise the need for language-hardware-specific compilers. Given that a compiler pipeline generally consists of front-, middle-, and back-end, LLVM takes an intermediate representation (IR) from a front-end, apply multiple optimisation techniques, and emits an optimised IR in one of these extension: i) human-readable assembly (.ll); ii) bitcode (.bc); and iii) C++ object code (.o); LLVM follows the static single assignment (SSA) form to simplify the analysis of dependencies among variables, and so allows an efficient program optimisation-in-compilation.

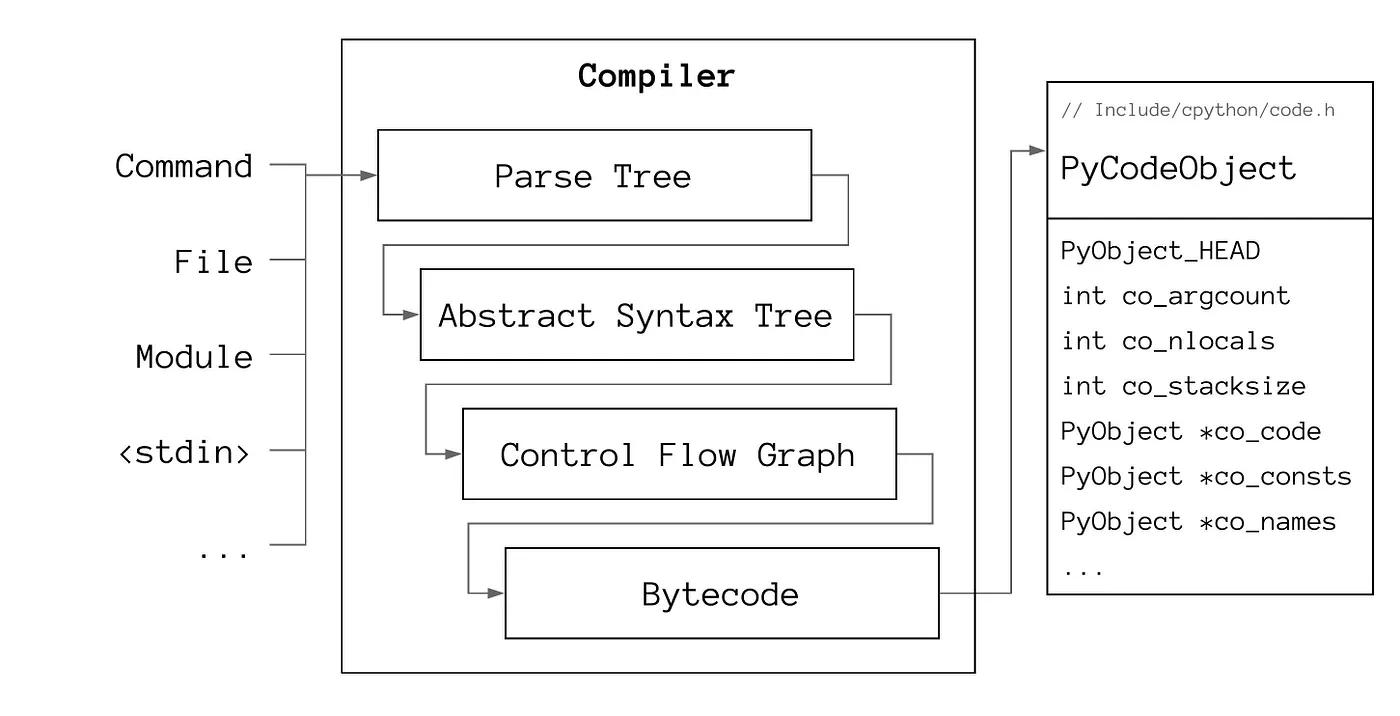

In practice, a front-end compiler makes an IR with i) lexical analyser (Lexer): breaks the source code into tokens (e.g. keyword, literal, identifier); ii) parser: builds an abstract syntax tree (AST) based on the analysed tokens and the language’s grammar to represent the hierarchical structure of the source code; iii) semantic analyser: further ensures correctness of the program beyond syntax (e.g. type checking, variable declarations, and scope resolution); and iv) IR generator: transforms the examined source code into a universal IR; Modern C compilers (e.g. Clang, GCC) are self-hosting (i.e. also written in C/C++) and were developed via bootstrapping processes.

Subsequently, the LLVM Core takes a role in IR optimisations (e.g. dead code elimination), and a back-end can compile the outputted IR ahead-of-time (AOT) (i.e. llc cmd), or execute it directly using a just-in-time (JIT) compiler (i.e. lli cmd). However, as the expressive power of an IR and its ability to capture high-level semantics largely determine the sophistication of a front-end compiler, a lot of high-level languages have developed their own AST for associated infrastructures (e.g. Swift-SIL, Rust-MIR, Pytorch-TorchScript), and this led to the unnecessary coexistence of various modules (i.e. performing similar tasks) across languages and/or hardwares specific IRs.

The multi-level intermediate representation (MLIR), located in llvm-project/mlir, is a framework placed between a language’s AST and LLVM IR, enabling different levels of abstraction to coexist. While MLIR is a powerful representation, it does not intend to provide low-level machine code (e.g. .exc file) generation algorithms (e.g. register allocation, instruction scheduling), as such tasks are handled by lower-level optimisers (i.e. LLVM). Nor does it intend to serve as a source language for user-defined kernels, analogous to CUDA C++. MLIR uses dialects to represent the semantics of both general-purpose and domain-specific languages (DSLs) as first-class entities.

One has to define domain-specific operations, types, and attributes in MLIR by extending the IR to make a custom dialect. Specifically, it involves i) specifying the dialect using MLIR’s C++ API or table-driven declarative rewrite rule (DRR), ii) defining the semantics of the new operations, and iii) integrating them into the MLIR framework. Numerous custom dialects illustrate MLIR’s adaptability, including the Standard dialect for general-purpose computation (e.g. arithmetic), the LLVM dialect for LLVM IR interoperability, and the Tensor and Linalg dialects for some maths. The GPU and SPIR-V dialects are well-known domain-specific dialects for hardware acceleration.

3.2. Interpreter (w/wo JIT)

Cross-compiling has become more accessible with LLVM, but despite extensive research, compiler development remains challenging and limits their widespread use. Accordingly, interpreters have gained popularity among ML researchers who favour iterative and also experimental workflows. Modern interpreters compile source code into bytecode, which is i) hardware-agnostic: independent of the varing ISAs; and so ii) executable on any platform (e.g. Linux, macOS, Window) with a compatible virtual machine (VM); The VM in the context often provides an abstraction layer to execute the bytecode by simulating a standardised runtime environment over an OS.

The CPython compiler’s design is outlined in PEP 339 (i.e. withdrawn), but in essence, it converts source code (i.e. .py) into bytecode (i.e. .pyc), that consists of operation codes (opcodes) and corresponding operands (i.e. if exist). Opcodes are lower-level instructions, and operands represent various entities depending on the context of opcode. Operands can vary from literal constants or immutables (e.g. number, string, tuple), variable or attribute names, or code blocks (e.g. function, class, comprehension). Similar to how assembly instructions like pop, push, and ret are represented numerically, each operand corresponds to an integer (i.e. dis.opname[int]).

CPython continues to improve its efficiency via various optimisations. Regarding opcodes, the adaptive interpreter, introduced in PEP 659, dynamically replaces generic opcodes with specialised, faster versions at runtime based on profiling data. It reduces overhead for frequently executed instructions, and so significantly enhances execution speed. One can attempt to disassemble opcodes using the dis module or examine further through the CPython source code: [Include/opcode.h, Python/opcode_targets.h, Lib/opcode.py]. In short, Bytecode, formed by combining opcodes and operands, serves as an IR which the CPython interpreter can efficiently execute.

The _PyAST_Compile function in Python/compile.c is the main entry point for compilation. This generates a PyCodeObject struct that represents a chunk of executable code that has not yet been bound into a function. The struct encapsulates i) co_code: the sequence of opcodes and operands representing bytedcode instructions; ii) co_consts: constants (e.g. number, tuple) or nested PyCodeObject instances (e.g. function, class, comprehension); iii) co_names: variable and attribute names; iv) co_varnames: local variable names; v) co_freevars / co_cellvars: variables used in closures; vi) co_filename / co_name: the source file and code block name; and others;

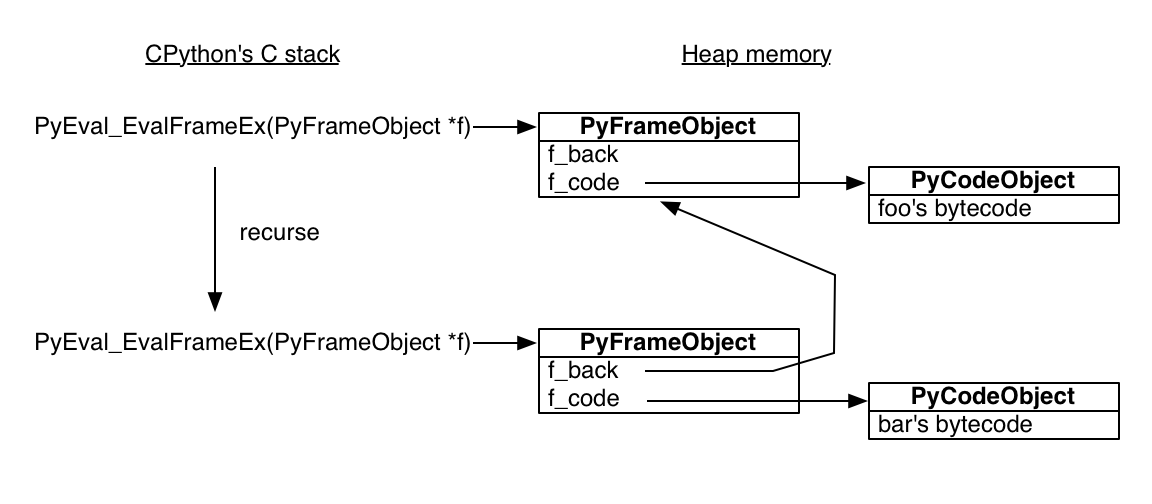

The Python virtual machine (PVM), a stack-based interpreter, then executes the compiled bytecode at runtime by simulating a CPU’s execution model (i.e. stack machine). The (legacy API-) PyFrame_New in Objects/frameobject.c emits a PyFrameObject that models a stack frame, representing the execution context of a function call. A frame object has i) f_code: a reference to the PyCodeObject; ii) f_back: a path to the last frame; iii) f_locals: local variables of the function; iv) f_globals: accessible global variables; and others; Note that f_back forms a linked list of PyFrameObject pointers, while one can examine objects and frames with the inspect or sys modules.

The _PyEval_EvalFrameDefault in Python/ceval.c serves as the main interpreter loop and, as specified in PEP 523, it is configurable. All Python objects and data structures are heap-allocated by construction. A PyCodeObject act as a blueprint, and a PyFrameObject holds the execution context for the function call, so the call stack itself is handled in the traditional stack memory. **In recent updates, Python 3.11 splits the stack frame object into: a PyFrameObject and a struct _PyInterpreterFrame. Also, Python 3.12, as proposed in PEP 709, inlines comprehensions into the code rather than compiling them as nested functions that isolate iteration variables.**

Compared to OG interpreters which traversed ASTs recursively during runtime (and suffered from slow execution), the introduction of a VM and bytecode input significantly improved performance and portability. ASTs are well-suited for representing program structure but are not optimised for efficient execution. On the other hand, a lower-level IR allows more sophisticated optimisations and custom implementations. For instance, PEP 3147 introduced the .pyc repository directory, known as __pycache__, to better organise files and improve cross-version compatibility. CPython can skip recompilation if an up-to-date .pyc file is present in the directory.

Ultimately, JIT compilers appeared to further accelerate execution speed. Given that AOS (i.e. general-case) compilation can be very slow, as Omer Iqbal mentioned in his presentation, traditional JIT compilers that can be found in other languages, such as Java (JVM) or JavaScript (V8 engine), employ multi-tiered optimisation strategies. These tiers, such as the ‘warm’ or ‘hot’ stages, adeptly detect and categorise frequently executed code paths, while the code regions will be compiled into machine code during runtime. CPython, the reference-only implementation of Python, lacks the feature, but one can find other implementations incorporating JIT compilation.

3.3. GPU Programming

Compute unified device architecture (CUDA) is a parallel computing platform featuring compilers, libraries, and developer tools. It was created in 2006 by NVIDIA and embedded within C/C++ as a DSL for programming their own GPUs. NVIDIA CUDA Compiler (NVCC), which uses NVVM (i.e. their derivatvie of LLVM), compiles a .cu into a i) parallel thread execution (.ptx) human-readable assembly; or ii) directly executable CUDA-specific binary (.cubin); This .cu extension allows NVCC to delegate the compilation of host code (i.e. CPU-related: #include) to Clang, while focusing on device code (i.e. w/ CUDA extension: __global__, or __device__) compilation.

CUDA programming in C/C++ is generally more cumbersome due to the need for manual handling of: i) memory coalescing; ii) SMEM synchronisation and conflict management; iii) scheduling within SMs; and iv) scheduling across SMs; Thus, OpenAI introduced Triton in 2021 as Python-based framework and DSL, making it easier for ML researchers and practitioners to write optimised GPU code (i.e. primitive tensor operations). Built on top of the LLVM-MLIR infrastructure, Trition automates the first three challenges inherent to the SIM”T” model by enabling tile-based programming with multi-dimensional blocks (i.e. rather than operating on individual “T”hreads).

The Triton compiler captures the AST of a kernel, that is written in triton.language and also decorated by @triton.jit, and progressively generates IRs through MLIR dialects: i) Triton Tensor Graph IR (TTGIR): a hardware-agnostic IR for high-level opt.; ii) Triton-GPU IR: a more optimised hardware-specific IR for efficient GPU code generation; and iii) LLVM IR: LLVM lowers the IRs into an executable; While it offers finer control over SMEM and HBM compared to eager mode PyTorch (i.e. had the C/C++ backend), the throughputs of the fused softmax in Trition seems higher than both the F.softmax and JIT-compiled (i.e. TorchScript) PyTorch-native implementation.

3.4. PyTorch 1.x

PyTorch (PT) is an open-source deep learning (DL) framework developed by Facebook (-evolved from the Torch7 library). TensorFlow began with static computation graph (SCG) for finer serialisation and deployment, but PT 1.x operate with dynamic computation graph (DCG) as they sincerely value flexibility in modelling. A torch.Tensor class is the data structure representing homogeneous, multi-dim. rectangular arrays. Attributes abstrated by the class, [requires_grad, grad, grad_fn], are one of a core of automatic differentiation (AD) engine alongside a DCG. Other key attributes, [size, stride, dtype, device, layout], describe shapes in memory, similar to numpy.array.

PT 1.x. has many built-in Function classes (e.g. AddBackward0, MulBackward0), for instances and/or subclasses of torch.Tensor (e.g. nn.Module.weight, torch.nn.Parameter), to enable gradient computation in backpropagation. A new operation can also be defined via torch.autograd.Function. When PT dynamically builds a DCG at runtime, torch.Tensor instances and torch.autograd.Function objects become the nodes and the edges, both of which represent data flow and logic of the program. Tensor.register_hook can be invoked in the backward pass to inspect, modify, and/or visualise gradients as they are being computed within a neural network (NN) model.

While the Python API primarily served as a high-level interface which delegates tasks to lower-level modules designed for AD or basic tensor operations, PT 1.x rely on its C++ backends to address the slow executions associated with eager mode. In particular, A tensor library (ATen) is a C++ wrapper for C and CUDA libraries, such as the math kernel library (MKL), CUDA deep neural network (cuDNN), and CUDA basic linear algebra subprogram (cuBLAS). However, the lowest-level modules handle primitive tensor operations without native AD supports, and thus ATen incorporates an autograd engine, preserving flexibility while leveraging the performance of CUDA.

Alban Desmaison commented: “Given that Torch7 (i.e. written in Lua) relied on these C/C++ libraries: Tensor Handling (TH/THNN) and Tensor Handling CUDA (THC/THCUNN being CUDA dependent) for CPU/GPU computing, i) the initial PT is simply a Python wrapper + new autograd engine + TH*; ii) Aten was developed later to provide more preferred C++ interfaces, resulting in new functionalities in PT 1.x being implemented within ATen rather than TH*; iii) LibTorch is the distribution of PyTorch, a replacement and/or supplmenet for the Python wrapper (i.e. which used Pybind11 to wrap ATen), enabling more direct access to the core backends through the C++ API”.

Script mode can achieve higher throughputs at inference. They implemented TorchScript (TS) on top of LibTorch to ace deployments, while preserving a clean UX with two APIs: torch.jit.trace and torch.jit.script. The former traces a model on some proxies and records tensor operations, whereas the latter parses the entire source code, that is, both produce a SCG of potentially different regions being captured. An TS-compiled Python program can be either executed at the PT JIT runtime, or, exported into non-Python env. such as the Open Neural Network Exchange (ONNX) format which others shall comply with to implement a workflow: “train.py $\to$ deploy.cpp”.

3.5. PyTorch 2.0

However, TS does not support some libraries or Python features (e.g. generator, comprehension), but forces incompatible modules to be either wrapped/avoided. TS-compiled models also loss some of its eager charm (i.e. eager execution flexibility). The torch.compile API is a new domain-specific JIT compiler, advertised as Pytorch 2.0 (PT2). It still converts PyTorch-related regions into a SCG (i.e. as TS), but can continue to interpret arbitary Python code and reamin “pythonic”. They reported this dynamic trace (unlike TS-“once and for all”) works 93% on the 163 open-source models, and the (partially-) compiled model runs 43% faster in training on the A100.

TorchDynamo (i.e. Dynamo) is the front-end compiler designed for Python-level graph acquisition. It leverages PEP 523 (i.e. the frame evaluation API) and functional transformation (FX) toolkit. Dynamo initially i) hooks into bytecode execution; ii) analyses bytecode with proxies and identifies torch.* and non-torch.* calls; and iii) transform compilable regions as an FX graph (i.e. torch.fx.GraphModule) of Torch IR; E.g. If a mock .py of {[torch.* ops, torch.tensor]: TorchVariable, Py_builtin_variables: BuiltInVariable, Py_lists: ListVariable}, then the operations on a TensorVariable adds a FX node, which can be drawn by torch.fx.symbolic_trace (or, torch._dynamo.export).

Graph breaks can occur when Dynamo encounters complex behaviours, such as dynamic control flow, refering to situations where the path of execution depends on runtime data (e.g. new inputs). For example, if a program has condition = lambda x: x.mean() > 0.5, that relies on the values held in x: torch.Tensor, then the corresponding region if condition(x): return x / 1.1; return x / y; will be represented by two FX graphs. Dynamo must trace all FXs for higher compilability, but frequent graph breaks often mean performance hit because the runtime checks introduced by guards can increase latency, which we want to avoid especially in situations like inference pipelines.

A set of guards, which act as runtime checkpoints, collectively validate that the conditions under which the FX graphs were compiled remain consistent during execution. For instance, a shape guard triggers recompilation if a shape of tensor is shifted by a new batch size, causing the cached graph to be discarded and the function retraced. Dynamo employs various types of guards, including those for types, constants, and nn.Module structures. These guards would also ensure the correctness of AOTAutograd (i.e. on a BWD FX). APIs such as torch._dynamo.explain and torch.fx.graph_module.GraphModule.print_tabular are helpful for diagnosing graph breaks.

High-level FX graphs (i.e. Torch IR) produced by Dynamo accommodate guards, making them challenging for downstream compilers to process. Also, the nodes in FX graphs are missing a grad_fn to be .backward-callable, while separate graphs for a backward pass (i.e. BWD), that are tightly coupled to the efficiency of the forward pass (i.e. FWD), are not yet constructed. AOTAutograd (i.e. AOT) under the torch._functorch.aot_autograd module addresses this by i) decomposing all IR nodes into functional operators; ii) capturing both FWD and BWD graphs (i.e. ATen IR); and iii) sending them to hardware-specific back-end compilers using __torch_dispatch__;

__torch_dispatch__ does …. __torch_dispatch__ is the only way to go from C++ back into Python…. At the end of __torch_dispatch__ tracing, AOT Autograd has a forward graph and joint forward-backward graph. AOT Autograd then uses a partitioner to isolate the forward and backward graph….

AOT leverages PrimTorch (torch/_prims), a minimal abstraction of ATen designed for graph-based workflows, reducing ~2k of PyTorch operators to ~250 streamlined primitives. While pointwise operators are memory-bound, these primitives map efficiently to low-level hardware instructions, enabling backend compilers to fuse ops. AOT may also use activation checkpointing to recompute intermediate outputs during the backward pass, trading computation for reduced memory usage. When paired with a fusing compiler, recomputed operators can be fused for both memory and runtime. Examples include NNC (fuses pointwise ops for CPU) and nvFuser (for CUDA).

TorchInductor (i.e. Inductor) is a PyTorch-native compiler … PT2 provide various back-ends…. i) training & inference: {TorchInductor, CUDA graphs, IPEX, ONNX}; ii) inference-only: {Torch-TensorRT, IPEX , TVM, OpenVINO}. Note that IPEX is for CPU. By using user-defined Triton kernels with torch.compile, you can integrate these optimized computations into your PyTorch model, potentially achieving significant performance improvement. That is, a kernel fusion and quantisation (see **) are core part of its LLVM-kind optimisation pipeline….

A graceful compilation requires some understanding of its internals …. Compilation stack is followed…. We shall actively use Triton because CUDA is closed…. Currently, torch.compile has the fullgraph parameter that controls the granularity of tracing. When set the default False, it attempts to discover compileable regions in the function. If set to True, the entire function must be capturable into a single graph. It also has the dynamic parameter. If the argment is set to ….

IV.

4.1. Wrap up

Specialised processors are equipped to offload computational workloads from the CPU and increase performance. GPUs are currently the most widely recognised accelerator, whereas application-specific integrated circuits (ASICs) offer greater efficiency despite their limited programmability. Notable ASIC-based hardware includes AWS Inferentia and Trainium, Google’s TPU, and Apple’s neural engine (ANE). As programs relying on specific accelerators are hardware-dependent and require compilation for the target machinery, they support CPU fallback for a set of operations (e.g. a custom activation function within a ML model) which cannot be accelerated.

4.2. LLM Training (Extra)

Hoffmann et al. (2022) revealed the “Scaling Laws for Neural Language Models”, introduced in Kaplan et al. (2020), by stating that the optimal compute utilisation in FLOPs is achieved by proportionally scaling model parameters and training tokens. In particular, they observed that extremely large LLMs, such as GPT-3 (170B) and Gopher (280B), have been underfitted due to an insufficient amount of training data relative to their parameter counts, and so trained Chinchilla (70B) with the same compute budget as Gopher but with 1.4 trillion tokens (i.e. 4x bigger), outperforming many parameter-heavy models on many benchmarks, except those related to Maths.

4.3. LLM Serving (Extra)

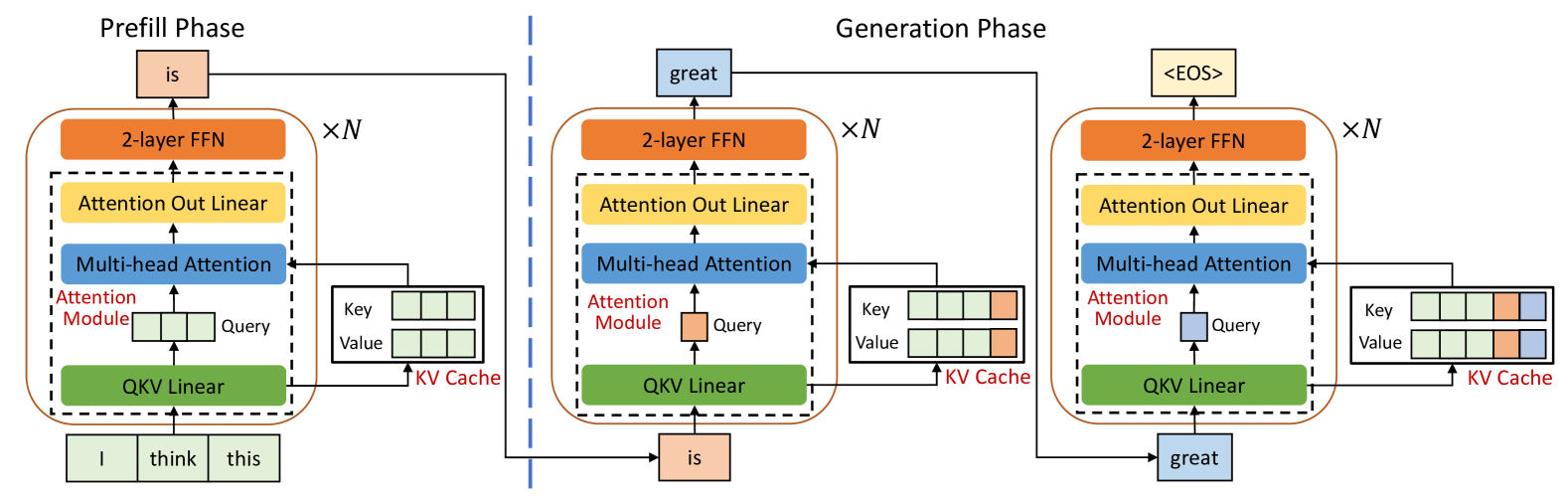

DeepMind’s new training principles hyped smaller LLMs, inspired by Transformer introduced in Vaswani et al. (2017), but inferencing at the production level needs further compute and memory optimisations, since they generate $K$ and $V$ autoregressively during the decoding. A KV cache is specifically designed to reduce FLOPs by storing precomputed vectors in VRAM, but its size scales quickly with $\text{batch size}$ * $\text{context size}$ * $2$ * $\text{num. layers}$ * $\text{dim. hidden}$ * $\text{sizeof(precision)}$, limiting large context sizes (e.g. ≥ 1M). For example, a LLaMA-2 (7B) loaded in FP16/BF16 (2 bytes/element) and a batch size of 1 needs 14GB for weights and 2GB for cache.

The MHA layer in Transformer computes each attention head $h_{i} = \text{Attn}(Q_{i}, V_{i}, K_{i}) = \text{SM}(X W_{i}^{(q)} (X W_{i}^{(k)})^T / \sqrt{d_{\text{head}}}) X W_{i}^{(v)}$, where $h_{i} \in \mathbb{R}^{\text{batch size} \,\times\, \text{seq. length} \,\times\, \text{dim. head}}$, but studies reported that using MHA with KV cache for longer sequences $X$ is infeasible. In fact, Shazeer (2019) proposed MQA that uses a single set of mean-pooled key-value pairs for all $h_{i}$, to lower memory requirements for such $X$ at the cost of a potential degradation in model accuracy. Ainslie et al. (2023), a group of fellows at Google, revisited MHA and MQA then presented GQA, which offers greater flexibility in pairing through an extra training with a checkpoint (i.e. via adaption).

Given the fact that language-modeling tasks often contain simpler subtasks, Leviathan et al. (2022) proposed speculative decoding as an alternative to autoregressive decoding algorithms (e.g. beam search). It reduces inter-token latency (rather than TTFT) in memory-bound LLM inference by i) drafting: generate a speculative sequence with a smaller, faster auxiliary model; ii) verification: the original model evaluates drafts in parallel using causal self-attention, accepting only matching tokens and discarding mismatches; Cai et al. (2024) developed Medusa which self-speculates and decodes using decoding heads, tree-based attention, and typical acceptance.

An iteration-level scheduling (i.e. continuous batching), proposed by Yu et al. (2022), reduced latency by determining the batch size at each iteration. However, existed systems reserved contiguous memory blocks for KV cache, leading to unused slots, internal/external fragmentation, and thus limiting throughput. Kwon et al. (2023) addressed these with i) PagedAttention, a non-contiguous on-demand physical memory allocation algorithm inspired by virtual memory and paging; and ii) KV cache sharing across and within requests; The authors released vLLM: A library for fast LLM serving - support PagedAttention and other useful techniques mentioned in this section.

As shown, fine-tuning on commodity hardware can also be optimised. LoRA, by Hu et al. (2021), accomplishes this by updating only a small set of low-rank matrices, leaving the original model intact, with extra memory and compute usages during inference. Moreover, Dettmers et al. (2023) introduced QLoRA with three key innovations: (i) the 4-bit NormalFloat (NF4) data type built on top of quantile quantisation; (ii) applying double quantisation on the 64 block constants; and (iii) leveraging a paged optimiser that offloads its states to RAM when needed; They observed that other quantisation methods often fail to properly dequantise weight values $W \sim N(0,1)$.

In GPU computing, a kernel is a small, parallelisable program (i.e. code blocks) running on cores, with each launch incurring overhead such as memory transfers. Kernel fusion lowers the overheads via consolidation of kernels into a single kernel, and Dao et al. (2022) proposed a fused I/O-aware attention that i) loads tiles of $Q, K, V$ from HBM to on-chip SRAM; ii) performs tile multiplications and incremental softmax reduction; and iii) obtains attention during the backward pass with a precomputed softmax normalising factor; Frameworks like PyTorch (i.e. automatic during compilation), CUDA, and TensorRT (i.e. for self-implementation) support kernel fusion.

Some CS-related concepts and terminologies may be reviewed in another posts….

(C:)

(C:) cs300

cs300 501. functional analysis

501. functional analysis

I gathered words solely for my own purposes without any intention to break the rigorosity of the subjects.

Well, I prefer eating corn in spiral .